Dr. Epstein’s Published Research

Dr. Epstein has discovered and quantified these 10 manipulation techniques.

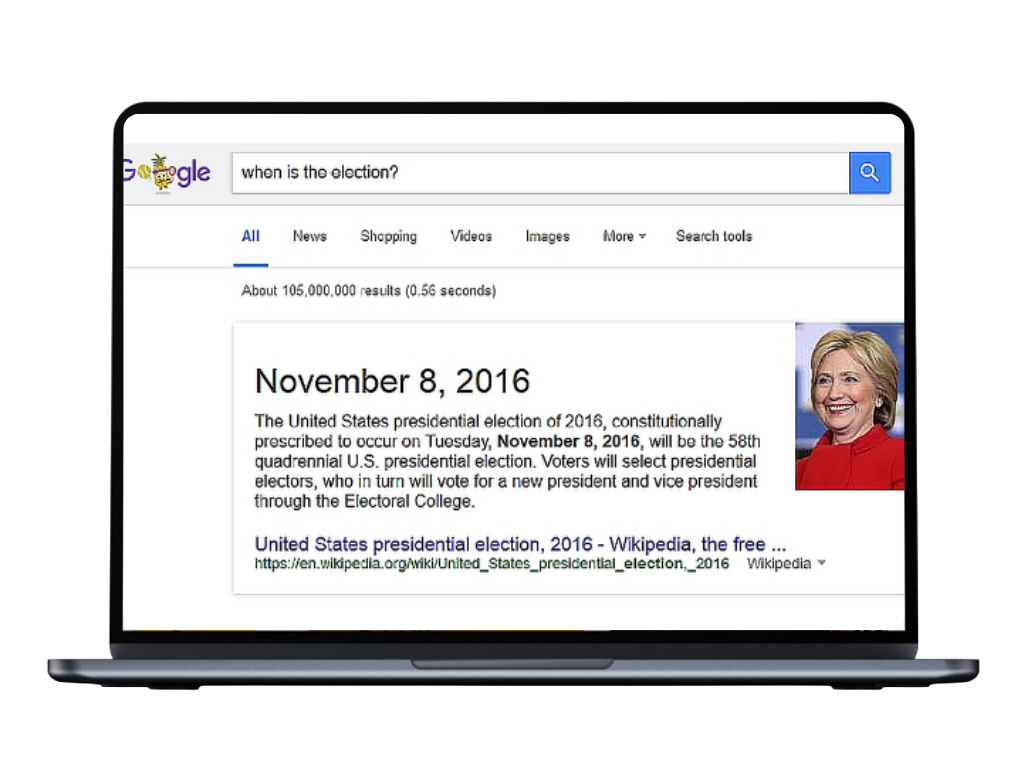

The Search Engine Manipulation Effect (SEME)

Discovered in 2013, Published by PNAS on August 4, 2015

Dr. Epstein’s initial research into SEME began in 2013. He realized that the first few results of any search are chosen far more often than those that come after. More than 66% of people choose from the first 5 search results, and more than 90% don’t look past the first page.

Dr. Epstein then asked: what if these top results were biased?

In his experiments, Dr. Epstein found that biased search results can shift voting preferences by 20% or more, and by up to 80% in some demographic groups.

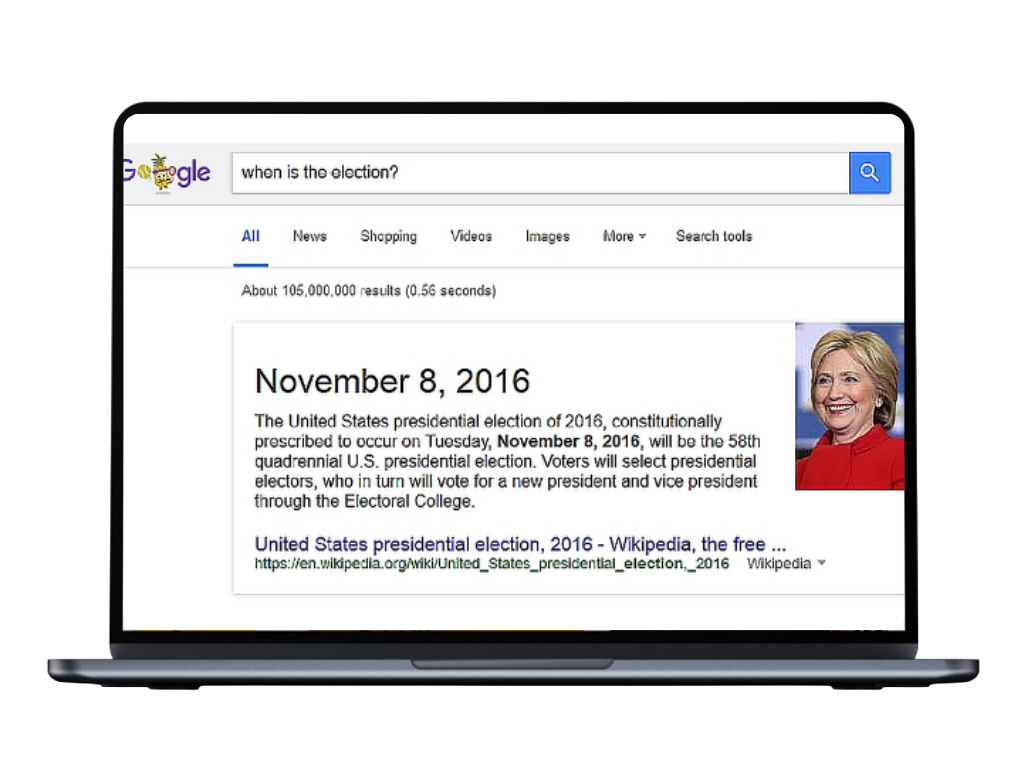

The Answer Bot Effect (ABE)

Discovered in 2016, Published by PLOS ONE on June 1, 2022

Dr. Epstein’s next discovery exposed the power of biased answers from search engine answer boxes and intelligent personal assistants (IPAs) like Alexa. He learned that giving people a single result instead of a list makes them think that there is only one right answer. But of course, this ‘right answer’ is just a Big Tech executive’s bias.

This manipulation tactic can shift voting preferences by anywhere from 38% to 65%.

The Targeted Messaging Effect (TME)

Discovered in 2016, Published by PLOS ONE on July 27, 2023

Dr. Epstein asked what would happen if a Big Tech company like Twitter sent important political messages only to undecided voters, leaving out those whose minds were already made up. He learned, to no one’s surprise, that a company doing this could sway the opinions of its targeted demographic by up to 87% in some cases. And in the same experiments that produced this 87%, only 2% of individuals knew that they were being manipulated.

The Video Manipulation Effect (VME)

Discovered in 2021, Published by SSRN on August 2, 2023

The Video Manipulation Effect concerns the “up next” and recommended videos on video streaming services. In 2021, YouTube reported that 70% of the site’s total views come from YouTube recommendations. Are these recommendations biased? Well, YouTube is owned by Google, so we’ll let you decide.

Dr. Epstein’s findings show that adjusting the recommendation algorithm can influence voters’ opinions by up to 65%, and simple masking techniques can be used to make any and all bias nearly undetectable while also boosting the effectiveness of manipulation.

The Opinion Matching Effect (OME)

Discovered in 2022, Published by SSRN on August 9, 2023

Have you ever taken an online quiz? If yes, you may have seen the Opinion Matching Effect firsthand. Dr. Epstein asked: what if online political quizzes were biased?

A study conducted with 773 undecided US voters revealed this startling effect. Voters were instructed to take a quiz designed to match them with the candidate or party closest to their own views. They were told the quiz was unbiased when in reality it was anything but. As a result, voter preferences shifted significantly towards the candidate the quiz was programmed to favor. This shift wasn’t just marginal; it ranged from 51% to 95%, measured relative to the number of participants who initially supported the favored candidate. These quizzes operate on algorithms that might not be as impartial as they seem.

“Awareness of the bias doesn’t protect you from the bias. If anything, it makes you more vulnerable” – Dr. Epstein

The Digital Personalization Effect (DPE)

Discovered in 2023, Presented at the 104th annual meeting of the Western Psychological Association on April 24, 2024

The Digital Personalization Effect describes how personalization can dramatically increase the impact of biased online content. Highly personalized manipulation can shift voter preferences by up to 70%! Big Tech has collected so much data about the content we do and do not enjoy that it can recommend news stories from sources we trust while still showing us the narratives and bias it wants us to see. When content is personalized, it has a much bigger impact.

The Differential Demographics Effect (DDE)

Discovered in 2023, Presented at the 104th annual meeting of the Western Psychological Association on April 24, 2024

Are certain demographics more susceptible to a specific manipulation tactic than others? Dr. Epstein’s findings show that every demographic you can think of (race, gender, even whether you wear glasses) can be a target of Big Tech’s manipulation. He also found that if Big Tech sends a targeted message to everyone, both inside and outside its target demographic, it can still isolate and manipulate that target demographic while seemingly maintaining neutrality. It must be fair since they sent the same message to everyone, right? Well, the message was never intended for all of us…

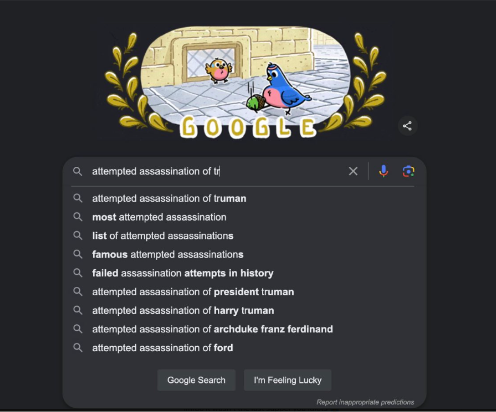

The Search Suggestion Effect (SSE)

Discovered in 2013, Published by ScienceDirect on June 22, 2024

The Search Suggestion Effect (SSE), discovered by Dr. Epstein in 2013, quantifies how search suggestions, or “autocomplete” suggestions, impact user behavior. Search suggestions have an impact on opinions, preferences, and votes! Google’s search suggestions can shift undecided voters’ opinions from a 50/50 split to almost 90/10, all without users’ awareness.

In a notable experiment conducted before the 2016 presidential election, participants were presented with search suggestions related to political candidates, including both negative and neutral options. The results showed that negative suggestions garnered significantly more clicks than neutral ones, about 40% of the total. This effect was even more pronounced among undecided voters, who clicked on negative suggestions over ten times more frequently than on neutral suggestions. If Google decides to suppress negative search suggestions for one political candidate while suppressing positive search results for the other one, it can easily manipulate us.

The Multiple Exposure Effect (MEE)

Discovered in 2024, Published by SSRN on August 2, 2024

Dr. Epstein wanted to show that manipulation is more successful on people who are exposed to similarly biased content over a longer period of time. To test this, he exposed the participants of his study to successive instances of bias. With Dr. Epstein’s Alexa simulator, the shift in voting preferences started at 71% after the simulator’s first biased response and ended at a staggering 98%.

Before the Multiple Exposure Effect, Dr. Epstein’s experiments had always used a single round of exposure. In other words, an experiment might have looked like this: participants gave an initial opinion, were shown biased content, and were then asked whether their opinion had changed. The Multiple Exposure Effect shows that exposing someone to similarly biased content over a longer period of time is more effective than a one-off.

Google doesn’t do one-offs.

The Multiple Platforms Effect (MPE)

Publication Date TBD

What would happen if someone blindly trusted search results, search suggestions, “up next” video recommendations, and so on? When you compound all of these internet manipulation effects, you are left with a population of individuals exposed to similarly biased content on every platform they use, every second of the day. This may sound like one of those predatory ads we see all the time, and that’s because it’s very similar. The difference is that when it comes to Google, very few can tell that what’s happening is predatory.

Google is the largest ad agency in the world.
